
Note: This article is just one of 60+ sections from our full report titled: The 2024 Legal AI Retrospective - Key Lessons from the Past Year. Please download the full report to check any citations.

Challenge: Defining Accuracy

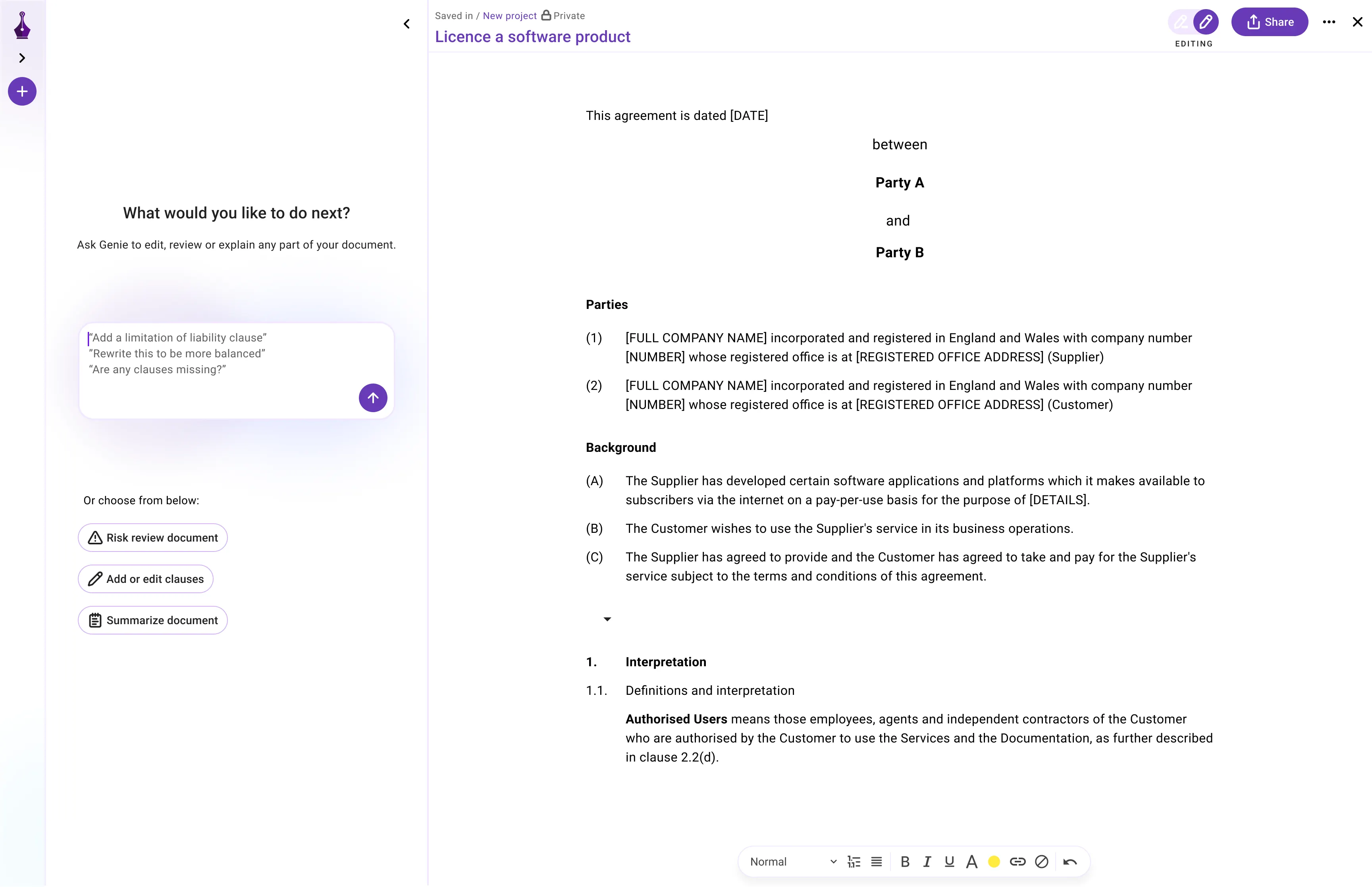

Let's say you wanted to "Investigate whether appending the whole contract to the prompt is the best practice in terms of accuracy and the cost-performance trade-off of doing this."

To answer the cost-performance trade-off question, you'd first need to define the cost of inaccuracy. Is it a higher risk of disputes, claims, and the associated legal fees, or simply the hours lost checking work so that those outcomes don't arise?

But that's easier said than done.

"Accuracy is a misleading metric. This has led to a search for different metrics measuring different aspects of Legal AI performance. This is a complex task and there are many classification metrics to measure different performance nuances (Akosa 2017; Holzmann and Klar 2024). There is unfortunately no one-works-for-all metric. However, the following are some of the most common classification metrics. Precision is the ratio of correctly predicted positive observations to the total predicted positives. It is calculated as: $\text{Precision} = \frac{\text{true positives}}{\text{true positives} + \text{false positives}}$. Recall is the ratio of correctly predicted positive observations to all the observations in the actual class. The F1 Score is the harmonic mean of Precision and Recall.

Metrics like precision, recall, and F1 give different results depending on which class is treated as positive and which is treated as negative."

Elifsu Parlan, AI Scientist, UK
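
To make the last point concrete, here is a minimal sketch of how the same set of predictions can score differently depending on which class is treated as positive. The clause labels ("risky" and "standard"), the ten example predictions, and the helper name `classification_metrics` are all hypothetical, chosen only for illustration; they are not taken from the report.

```python
from dataclasses import dataclass


@dataclass
class Metrics:
    precision: float
    recall: float
    f1: float


def classification_metrics(y_true, y_pred, positive):
    """Compute precision, recall and F1, treating `positive` as the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return Metrics(precision, recall, f1)


# Hypothetical ground truth and model output for ten contract clauses.
y_true = ["risky"] * 3 + ["standard"] * 7
y_pred = ["risky", "risky", "standard", "risky"] + ["standard"] * 6

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
risky = classification_metrics(y_true, y_pred, positive="risky")
standard = classification_metrics(y_true, y_pred, positive="standard")

print(f"accuracy: {accuracy:.3f}")
print(f"F1 with 'risky' as positive:    {risky.f1:.3f}")
print(f"F1 with 'standard' as positive: {standard.f1:.3f}")
```

On this made-up data, accuracy is 0.800, while F1 is about 0.667 when "risky" is the positive class and about 0.857 when "standard" is. The predictions are identical in both cases; only the choice of positive class changes, which is why a single headline number can mislead.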
